DeepSeek: A Non-Tech Language Explanation Of What Actually Happened

Misinformation everywhere

Hi investors👋

If you missed the big news today…there’s a small Chinese AI startup called DeepSeek AI that wiped out $2 trillion from the US equity markets and caused NVDA, the third largest company on Earth, to drop 17%, wiping out $615 billion in market cap.

Fact: This loss in market cap today is more than the combined value of Nike, FedEx, CVS, Chipotle, Delta, and Target. Mental.

But is this a big breakthrough, or is there a load of misinformation flying around?

The answer is both. Here’s why👇

Before I dive into the crux of this article, I just want to take a moment to remind my readers that I’m offering a 20% discount to my paid tier. That’ll be just $14 a month.

Here’s the news that’s been flying around today:

DeepSeek is basically a competitor to ChatGPT, but at much lower cost. Reportedly, DeepSeek spent only about $5 million training its model. Compare that to OpenAI ($10 billion of investment in the last 4 months) and it’s about 2,000x cheaper. Strictly, that compares a training bill with fundraising, so it isn’t like-for-like, but that’s the headline.

Reportedly, DeepSeek AI has also only been around since 2023, and is led by a fund manager who has basically run DeepSeek on the side.

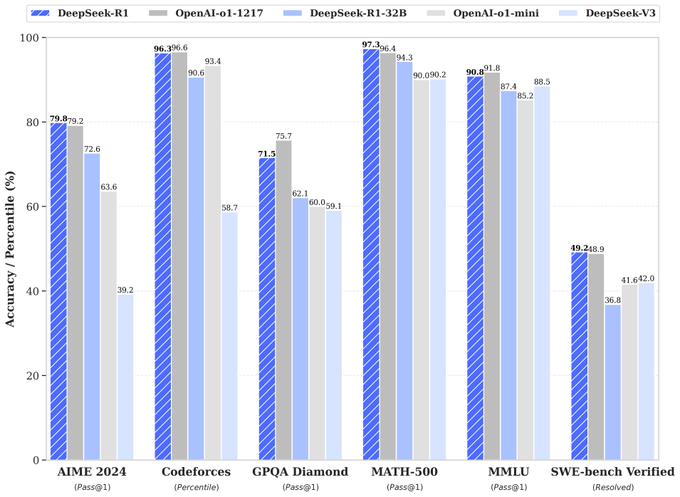

Initial research shows that DeepSeek does a few things better than ChatGPT, and a few things slightly worse…but for a 2,000x reduction in price that’s a pretty good deal.

All this led to “doomsday AI” moments, “China is winning the AI race” headlines, etc. But let’s not jump to conclusions. I’ve seen very mixed messages online from high-profile figures. For example, Marc Andreessen posted:

Chamath posted:

But then we’ve had Bernstein analysts saying:

“DeepSeek did NOT build their models for $5 million”

“DeepSeek models are much smaller than OpenAI”

“DeepSeek models are not a miracle”

So here’s an unbiased opinion of exactly what’s gone on today and what will likely happen.

What is DeepSeek?

DeepSeek was founded in 2023 by Liang Wenfeng, a quant trader who co-founded the hedge fund High-Flyer, and he runs DeepSeek with ~200 employees. The team started out in quant trading, but when Xi cracked down on this area, they turned their mathematical and engineering expertise to DeepSeek. The claim doing the rounds is that they trained their model (DeepSeek-V3, the base model R1 is built on) for ~$5-6 million using only 2,048 Nvidia H800 chips. This headline is misleading, and it’s the first thing I want to discuss.

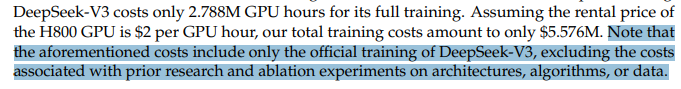

A lot of people have come to doubt DeepSeek’s claim that it used just 2,048 chips. DeepSeek’s own note, shown below, hints at why: “the aforementioned costs include only the official training of DeepSeek-V3, excluding the costs associated with prior research and ablation experiments on architectures, algorithms, or data.”
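To see why that note matters, here’s a rough sketch of how the headline number is put together. The GPU-hour figures and the $2-per-GPU-hour rental price come from DeepSeek’s V3 technical report, as I understand it. The price is DeepSeek’s own assumption, not what they actually paid. The snippet just redoes the arithmetic.

```python
# Figures as reported in DeepSeek's V3 technical report (as I understand it).
# The $2/GPU-hour rental price is the report's ASSUMPTION, not a measured cost.
GPU_HOURS = {
    "pre-training": 2_664_000,
    "context extension": 119_000,
    "post-training": 5_000,
}
PRICE_PER_GPU_HOUR = 2.00  # USD, assumed rental price
NUM_GPUS = 2_048           # the headline H800 count

total_hours = sum(GPU_HOURS.values())
total_cost = total_hours * PRICE_PER_GPU_HOUR
days_on_cluster = total_hours / NUM_GPUS / 24  # wall-clock days if all GPUs run together

for stage, hours in GPU_HOURS.items():
    print(f"{stage:>18}: {hours:>9,} GPU-hours  ${hours * PRICE_PER_GPU_HOUR / 1e6:.3f}M")
print(f"total: {total_hours:,} GPU-hours -> ${total_cost / 1e6:.3f}M")  # $5.576M
print(f"about {days_on_cluster:.0f} days on {NUM_GPUS:,} GPUs")

# Not in this number (per the report's own note): earlier research and
# ablation runs, plus the cost of owning the hardware in the first place.
```

In other words, the ~$5.6 million is the rental-priced cost of the final training run only. The chips themselves, the staff and all the trial-and-error experiments that came before sit outside that figure.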

Alexandr Wang of Scale AI believes the real number is ~50,000 Nvidia H100 chips. He says DeepSeek claims 2,048 because it can’t admit to still getting these US chips despite the U.S. chip ban on China. As I’ll touch on briefly later, it’s highly likely that there have been:

Export control time lags, chip smuggling, and loopholes to get more chips in than they are saying.

A huge number of chips used for “trial and error” to develop the efficiency techniques that then allowed them to train a model on 2,048 chips.

The total number of chips used will be far higher than 2,048.

There are opinions everywhere on exactly how many chips DeepSeek used. What we can know almost for certain is that DeepSeek have used considerably fewer chips (and less capital) than we have become used to in AI. This was bound to happen at some point, as with everything: first come rapid innovation gains, then rapid efficiency gains. DeepSeek seem to be the first big movers on efficiency.

We’ve become so used to spending millions of dollars training ever-larger models and really pushing the limits of innovation. So far, though, little focus has gone into developing the most efficient, cost-effective, and low-power models.

So how does DeepSeek work (very simply explained)?

Simply put, DeepSeek has managed to build a model that is smaller and leaner than OpenAI’s, lowering the cost while keeping performance more or less the same. That is very impressive, as an NVDA spokesperson said a few hours ago:

“DeepSeek’s work shows how new models can be created, leveraging widely-available models and compute that is fully export control compliant.”

Translation: “DeepSeek is innovating well and following regulatory standards, but we aren’t worried.”

How have they done this?

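They did much of the heavy number-crunching with 8-bit floating point numbers (FP8) instead of the usual higher-precision 16- or 32-bit formats. Smaller numbers mean much less memory is needed. To maintain quality, they broke the numbers into small tiles, each with its own scaling factor, so a few unusually large values can’t wreck the precision of everything else.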

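The model doesn’t use every single parameter for every word. It’s built as a “Mixture-of-Experts”: lots of smaller specialist sub-networks, of which only the most relevant few are switched on for each token (roughly 37 billion of its 671 billion parameters).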
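For the 8-bit point above, here’s a minimal sketch of two ideas. First, fewer bytes per number means less memory. Second, giving each small tile of numbers its own scale factor stops one big value from wiping out the precision of the small ones. Some assumptions, loudly:

- Python has no built-in 8-bit float, so the sketch fakes “8-bit” with simple integer rounding onto 255 levels. Real FP8 works differently, and this is not DeepSeek’s code.
- The 671-billion-parameter size is DeepSeek-V3’s reported total, used only for the memory arithmetic.
- The memory figures cover weights only, ignoring everything else held in memory during training.

```python
# Hypothetical sketch. ASSUMPTION: "8-bit" is simulated with integer rounding
# onto 255 levels (-127..127); real FP8 is a floating-point format.

BYTES_PER_VALUE = {"32-bit": 4, "16-bit": 2, "8-bit": 1}

def memory_gb(num_values: int, fmt: str) -> float:
    """Memory to store num_values numbers in the given format (1 GB = 1e9 bytes)."""
    return num_values * BYTES_PER_VALUE[fmt] / 1e9

def quantize(values: list[float]) -> list[float]:
    """Round values onto 255 levels that share ONE scale factor, then map back."""
    scale = max(abs(v) for v in values) / 127 or 1.0  # fall back to 1.0 if all zeros
    return [round(v / scale) * scale for v in values]

def quantize_per_tile(values: list[float], tile_size: int) -> list[float]:
    """Same rounding, but every tile of tile_size values gets its own scale factor."""
    out: list[float] = []
    for start in range(0, len(values), tile_size):
        out.extend(quantize(values[start:start + tile_size]))
    return out

def max_error(original: list[float], approx: list[float]) -> float:
    """Largest absolute difference between original and reconstructed values."""
    return max(abs(a - b) for a, b in zip(original, approx))

# 1) Memory for weights alone in a 671-billion-parameter model.
for fmt in BYTES_PER_VALUE:
    print(fmt, memory_gb(671_000_000_000, fmt), "GB")  # 2684.0, 1342.0, 671.0

# 2) Quality: four small weights, then a tile containing a big outlier (100.0).
weights = [0.01, 0.02, 0.03, 0.04, 100.0, 50.0, 25.0, 75.0]
shared = quantize(weights)                      # one scale for everything
tiled = quantize_per_tile(weights, tile_size=4)  # one scale per tile of 4
print("shared scale, error on small values:", max_error(weights[:4], shared[:4]))
print("per-tile scale, error on small values:", max_error(weights[:4], tiled[:4]))
```

With one shared scale, the 100.0 forces a coarse step size and all four small weights round to zero. With a scale per tile, the small weights come back almost exactly. DeepSeek’s report, as I understand it, applies a tile-and-block version of this idea at far larger scale.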

Result? 95% reduction in cost.
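On the second point, the usual name for the technique is Mixture-of-Experts (MoE). The model is split into many “expert” sub-networks, and a small router picks only a few of them for each token. Below is a toy sketch under loud assumptions:

- The experts are one-line functions and the router scores are made up.
- The router is a generic softmax top-k, which differs in detail from DeepSeek’s actual router.
- The 37B and 671B figures are DeepSeek-V3’s reported active and total parameter counts.

```python
import math
from typing import Callable

Expert = Callable[[float], float]  # toy stand-in for an expert sub-network

def softmax(scores: list[float]) -> list[float]:
    """Turn raw router scores into probabilities that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(router_scores: list[float], k: int) -> list[tuple[int, float]]:
    """Pick the k most likely experts and rescale their weights to sum to 1."""
    probs = softmax(router_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    total = sum(probs[i] for i in top)
    return [(i, probs[i] / total) for i in top]

def moe_forward(x: float, experts: list[Expert], router_scores: list[float],
                k: int) -> tuple[float, list[int]]:
    """Run ONLY the chosen experts, blend their outputs, and report which ran."""
    chosen = route(router_scores, k)
    output = sum(weight * experts[i](x) for i, weight in chosen)
    return output, [i for i, _ in chosen]

# Eight hypothetical experts: expert i multiplies its input by (i + 1).
experts = [lambda x, m=i + 1: x * m for i in range(8)]
scores = [0.1, 2.0, -1.0, 0.3, 1.5, 0.0, -0.5, 0.2]  # made-up router scores

out, used = moe_forward(1.0, experts, scores, k=2)
print(used)  # [1, 4]: only 2 of the 8 experts did any work
print(f"{out:.3f}")

# Scaled up: share of parameters active per token in DeepSeek-V3's reported setup.
print(f"{37 / 671:.1%} of parameters active per token")  # 5.5%
```

Only experts 1 and 4 are ever called, and the other six cost nothing for that token. That’s the sense in which the model “only uses the most important” parameters: all of them are still stored, but each token only pays for a small slice.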

What does this mean for the industry?

Firstly, I think it’s very important to give credit where credit is due. This is innovation that we haven’t seen before. However, here’s the reality.

These efficiency gains that DeepSeek have managed are going to allow other companies (in the US) to build even more powerful models using DeepSeek’s ideas. The fact that DeepSeek managed to train their V3 model with 2,048 H800 chips is extremely bullish for the AI / chip industry in general. If they can train their models using a small number of chips, imagine what the possibilities are for companies with 200,000+ chips.

Note how I put “in the US” in the above paragraph. DeepSeek likely got their hands on these chips (50,000+ in total) around the time the chip restrictions came in. Unfortunately, it’s not as simple as enforcing a chip ban and then having zero chips go to China. There are plenty of loopholes, and chip smuggling (likely the reason DeepSeek tried to claim only 2,048 chips). However, the next stages of innovation for DeepSeek and other Chinese firms will no doubt require far more chips (hundreds of thousands), no matter how efficient they become at training models. Today’s export controls will make this extremely difficult, and I sense it will only get more difficult over the next 4 years.

DeepSeek have also acknowledged this, saying there’s a “twofold gap in model structure”, meaning DeepSeek would have to “consume twice the computing power to achieve the same results.” So I do sense some difficulty ahead for DeepSeek. Today’s news is very thought-provoking for the wider industry, but it does not put DeepSeek in a dominant position at all.

My Take

At the end of the day, the true value creation over the next decade is going to be with AGI (Artificial General Intelligence). DeepSeek want to do this of course, but as I touched on above, there’s going to be a hard limit on the chips they can access, which will make this a considerably bigger challenge for them than for US tech firms.

With this being said, the likes of NVDA, ASML, TSM, MU, etc., all of which lost billions in market cap today, will be absolutely fine long term. To achieve AGI, we will still need hundreds of thousands of chips.

However, what is bound to happen is a realization that this immense spending on chips (without much focus on efficiency) cannot continue forever. Here I’m mainly talking about NVDA. It has gross margins in the 75% range, while competitors are either struggling for profitability or sitting at gross margins in the low-to-mid teens up to around 20%.

NVDA customers are willing to pay these hefty prices today because NVDA GPUs are the best. Companies also had to make sure they got ahead of the field early on to avoid being left behind. But I think that slowly, over time, with DeepSeek as a catalyst, there will be a shift towards performance per dollar rather than raw performance. CapEx can’t just keep rising by ridiculous amounts, and DeepSeek has shown that it is possible to produce good results at far lower cost.

That’s also why mega caps like AMZN and GOOG are designing their own custom AI chips (such as Amazon’s Trainium and Google’s TPUs) for efficiency gains in the future.

And this is exactly why I think NVDA dropped 17% today vs competitors like AMD which only dropped 6%. The market is looking at future profit potential and seeing that NVDA has:

A highly concentrated customer base, with many of its biggest customers building their own in-house chips (AMZN, MSFT, OpenAI, GOOG).

A gross margin that is no doubt going to get pressured for a while.

An extremely high valuation.

The positive part is that NVDA will still be in a dominant position for years to come, including in areas like quantum and robotics. The TAM is ginormous, so it can continue being one of the most successful companies in the world. But I’d expect some margin pressure over the next couple of years.

So is NVDA a good investment today? The big money has been made on NVDA, and I have no doubt about that. I don’t think this is “doomsday for NVDA” like many headlines suggest. But I do think there’s going to be a lot of focus on efficiency over the next period, and customers looking to expand their own margins won’t necessarily go to NVDA.

Will NVDA go up over the next 5 years? Yes.

Are there potentially better investments? I think so…but I’m by no means bearish on NVDA.

So where would I be looking?

The core of AI will continue to be TSM and ASML. Without these companies there are no chips in the first place. Subscribers will know I invest in ASML.

Software like SNOW, PLTR, CRWD, S

Many other areas that I’ll discuss in this newsletter

That’s all for today

I do hope you enjoyed this quick post on NVDA and DeepSeek. If there’s any feedback or additional information that you think would be necessary, please do reach out to me and let me know or leave a comment below.

Next deep dive is already being prepared…any guesses on what it will be?

Disclaimer

Disclaimer: The Accuracy of Information and Investment Opinion

The content provided on this page by the publisher is not guaranteed to be accurate or comprehensive. All opinions and statements expressed herein are solely those of the author.

Publisher's Role and Limitations

Make Money, Make Time serves as a publisher of financial information and does not function as an investment advisor. Personalized or tailored investment advice is not offered. The information presented on this website does not cater to individual recipient needs.

Not Investment Advice
